Light 👉🏽 Sound

Fall 2017

What if, instead of navigating the world primarily via perception of light through our eyes, we had to "see" with sound?

This object is an exploration of my fascination with human perception. Specifically, I wonder why and how our perception is composed of a mix of different "energy sensors," such as eyes, ears, and skin (tactile), and how that influences the way we navigate the world. To investigate, I explored what it would be like if the physics of perception were different. What if we could "hear" sonic energy with our skin, "smell" aromas with our eyes, or "see" light with our ears?

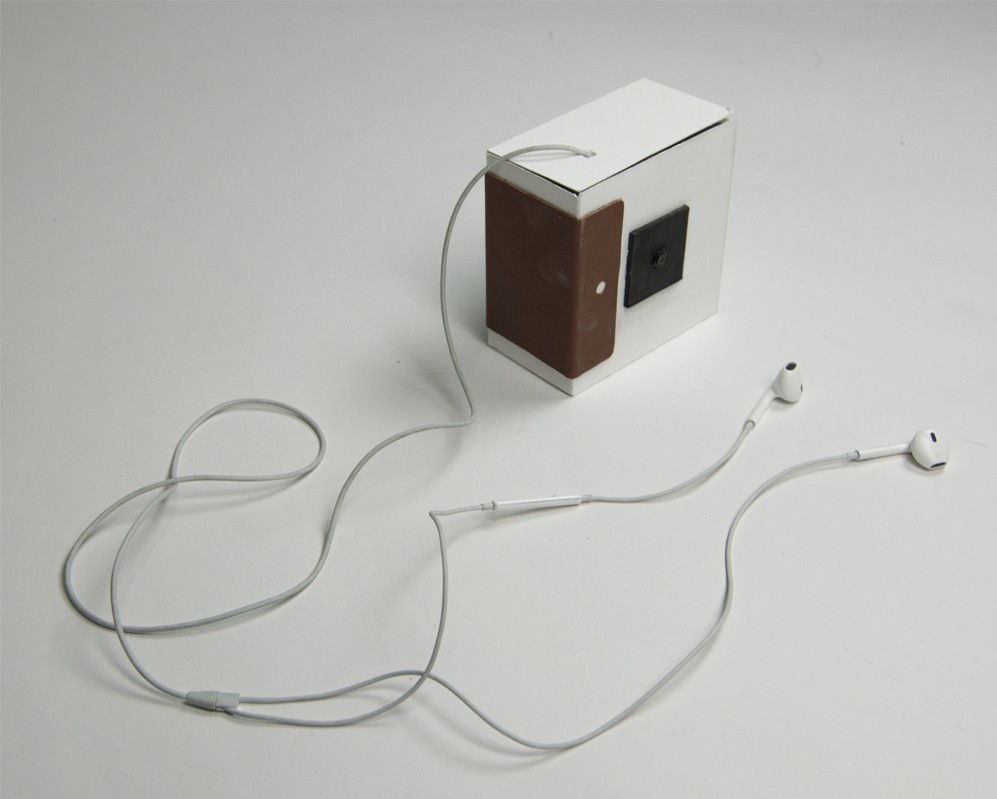

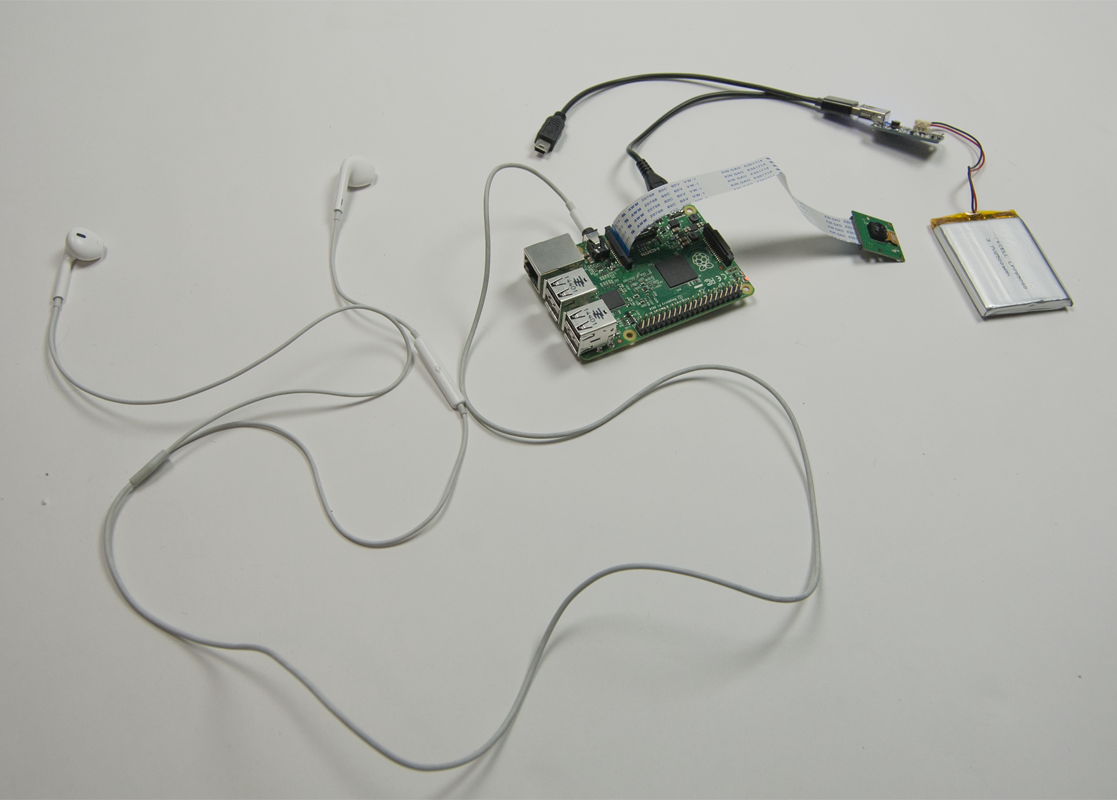

The resulting object, pictured below, is a "camera" that converts what you see into sound. Specifically, the hue and brightness of light are transformed into the frequency and volume of a sound. The resulting pulses of sound transmitted to the wearer's ears represent the color and relative darkness or lightness of wherever they point the camera.

Construction

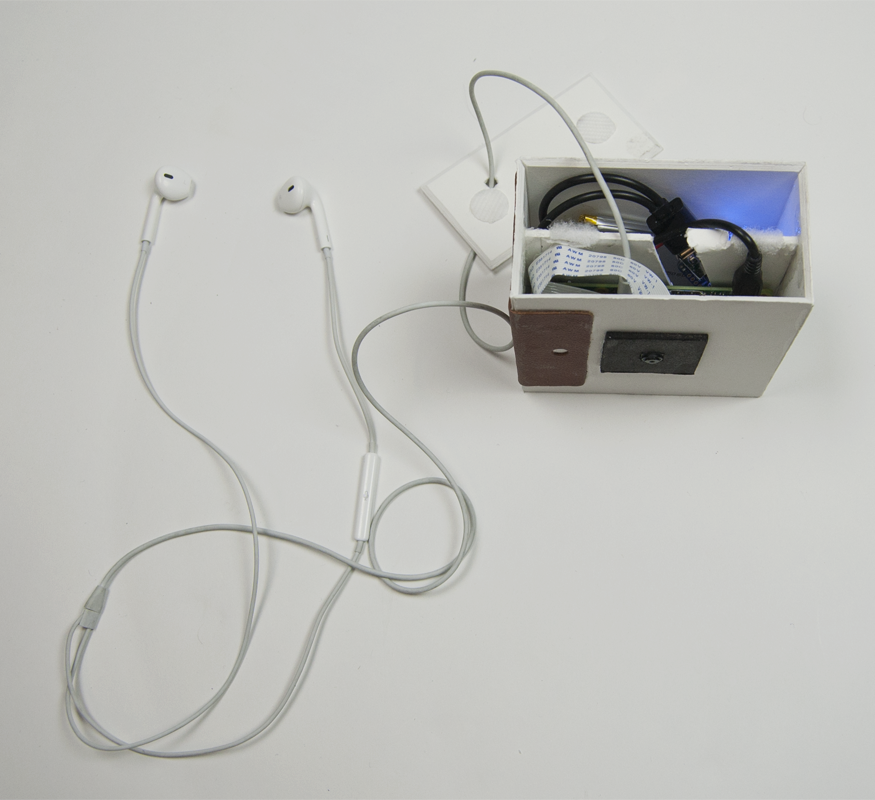

This project was my first experience programming in C. Most of the work involved taking input from the Raspberry Pi's camera module and transforming it into a format that could be output as sound. One challenge was reconciling the camera's frame rate with the audio library's sample rate. A camera typically delivers a few dozen frames per second, while audio playback consumes tens of thousands of samples per second, so each frame's color has to drive many consecutive audio samples.

To transform the camera input into sound, I averaged all of the pixel data from the camera, then calculated a single hue value from the red, green, and blue channels of that average. The hue value was then mapped to a wave frequency within the human audible range. The brightness value (light vs. dark), also averaged across all pixels, was mapped to a volume range. The resulting sound is a continuous tone that gets higher as you point the camera toward warmer colors, lower when you point it toward cooler colors, quieter in dark spaces, and louder in bright spaces.
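As a rough illustration of this pipeline, here is a C sketch that does the following:

- averages an RGB frame,
- derives an HSV-style hue and brightness from the averaged color, and
- maps them to a frequency and an amplitude.

It assumes a packed 8-bit RGB buffer (3 bytes per pixel, no row padding). The 200 to 2000 Hz range, the 0.8 maximum amplitude, and all function names are illustrative choices, not the values or code used in the actual project. Hues above 240° (violets and magentas) are clamped to the lowest pitch as a simple assumption.

```c
#include <stddef.h>

/* Illustrative tuning constants, not the project's actual values. */
#define MIN_FREQ_HZ 200.0
#define MAX_FREQ_HZ 2000.0
#define MAX_AMPLITUDE 0.8

/* Mean color of a frame, each channel normalized to 0..1. */
typedef struct {
    double r, g, b;
} rgb_mean;

/* Average a packed RGB frame: 3 bytes (R, G, B) per pixel, no padding. */
rgb_mean average_rgb(const unsigned char *frame, size_t pixel_count) {
    unsigned long long sr = 0, sg = 0, sb = 0;
    rgb_mean m = {0.0, 0.0, 0.0};
    for (size_t i = 0; i < pixel_count; i++) {
        sr += frame[3 * i];
        sg += frame[3 * i + 1];
        sb += frame[3 * i + 2];
    }
    if (pixel_count > 0) {
        m.r = (double)sr / (double)pixel_count / 255.0;
        m.g = (double)sg / (double)pixel_count / 255.0;
        m.b = (double)sb / (double)pixel_count / 255.0;
    }
    return m;
}

static double max3(double a, double b, double c) {
    double m = a;
    if (b > m) m = b;
    if (c > m) m = c;
    return m;
}

static double min3(double a, double b, double c) {
    double m = a;
    if (b < m) m = b;
    if (c < m) m = c;
    return m;
}

/* HSV hue in degrees, [0, 360). Greys have no hue; return 0 by convention. */
double rgb_to_hue(double r, double g, double b) {
    double max = max3(r, g, b);
    double delta = max - min3(r, g, b);
    double h;
    if (delta == 0.0) {
        return 0.0;
    }
    if (max == r) {
        h = 60.0 * ((g - b) / delta);
    } else if (max == g) {
        h = 60.0 * ((b - r) / delta + 2.0);
    } else {
        h = 60.0 * ((r - g) / delta + 4.0);
    }
    if (h < 0.0) {
        h += 360.0;
    }
    return h;
}

/* Warm hues (red, 0 deg) map to the highest pitch and cool hues (blue,
 * 240 deg) to the lowest; hues above 240 are clamped to the lowest. */
double hue_to_frequency(double hue) {
    if (hue > 240.0) {
        hue = 240.0;
    }
    return MAX_FREQ_HZ - (hue / 240.0) * (MAX_FREQ_HZ - MIN_FREQ_HZ);
}

/* HSV value (brightest channel) scaled to a volume: dark frames are quiet. */
double brightness_to_amplitude(double r, double g, double b) {
    return MAX_AMPLITUDE * max3(r, g, b);
}
```

Averaging before computing hue has a side effect. A frame that is half pure red and half pure blue averages to magenta (hue 300°), a color that appears nowhere in the scene. Also, pitch perception is roughly logarithmic in frequency, so an exponential mapping from hue to frequency would space colors more evenly by ear than the linear one used here.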

If I were to push my design further, I would love to make the program recognize the multiple colors that make up an image and layer the resulting frequencies, and potentially rhythms, on top of each other to create a more complex sound.

To house the electronic components, I constructed a low-cost box out of white core board. Its top is secured with Velcro for easy access to the internals. On the exterior, a leather pad serves as a handhold.

Components

- Raspberry Pi

- Raspberry Pi Camera Module

- Lithium-ion battery

- Power Converter

- Apple Headphones

- Velcro

- Glue

- White core board